NuGet packages can be tricky to update if you don't pay attention to your dependency chains.

The Importance of Layered Software Testing

Effective software testing requires a layered approach rather than relying on a single best practice. Different categories of tests—unit, service-scoped integration, component integration, and end-to-end—each offer unique benefits. This strategy ensures robust coverage, addressing various types of problems and enhancing overall confidence in software quality.

Achieving Software Quality Through Layered Practices

Software quality thrives not on excelling in one area but on maintaining competence across multiple layers of delivery. Each layer acts as a filter, removing around two thirds of defects. Focusing solely on one element, like exhaustive testing, leads to diminishing returns. Collaborative approaches across testing, CI/CD, and requirements prevent issues effectively.
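
A rough sketch of the arithmetic behind the filter idea, assuming each layer independently removes two thirds of whatever defects reach it. The starting count of 270 and the function name `remaining_defects` are illustrative assumptions, not figures from the post.

```python
from fractions import Fraction


def remaining_defects(initial: int, layers: int,
                      removal_rate: Fraction = Fraction(2, 3)) -> Fraction:
    """Defects left after passing through `layers` filters in sequence.

    Assumes every layer removes the same share of the defects that reach it.
    """
    return initial * (1 - removal_rate) ** layers


# Hypothetical starting point: 270 defects before any quality layer applies.
for n in range(5):
    print(n, remaining_defects(270, n))
```

Under these assumptions, four modest layers leave roughly 1.2% of the original defects. A single layer would need to catch nearly 99% on its own to match that, which is one way to read the point about diminishing returns.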

Turning requirements into product

I'm a software engineer at heart - I love writing code, deploying functionality, and seeing the impact it has. Even when I'm just shaving a few seconds off a repetitive task by removing an unnecessary button click - it's all about small improvements, often. So it might be surprising to hear, most problems which impact …

Sagas and distributed transactions

Controversial opinion: I have never had a useful discussion about sagas with anyone at any company I've worked with. I've found that the people who bring sagas up and make a song and dance about them are generally the same people who tend to over-engineer solutions and have difficulty 'keeping it simple'. I'd like to …

Code Reviews Without Pull Requests

I'm going to put my most controversial opinion right out there and wave it around, because I am sick of internal development teams blindly trusting code reviews performed on pull requests. Don't get me wrong. I think there are some very clever UIs offered by most providers (GitHub, Azure DevOps, Bitbucket, etc.) but no matter …

Delivery Focused Software Teams

Software delivery in many organisations is still far too waterfall, inefficient, and often unhealthy to be a part of. The myriad of methodologies which can be applied in different combinations to achieve something more efficient can be overwhelming. Normal organisational structures deny people the authority to make the efficiency savings which seem logical to them. …

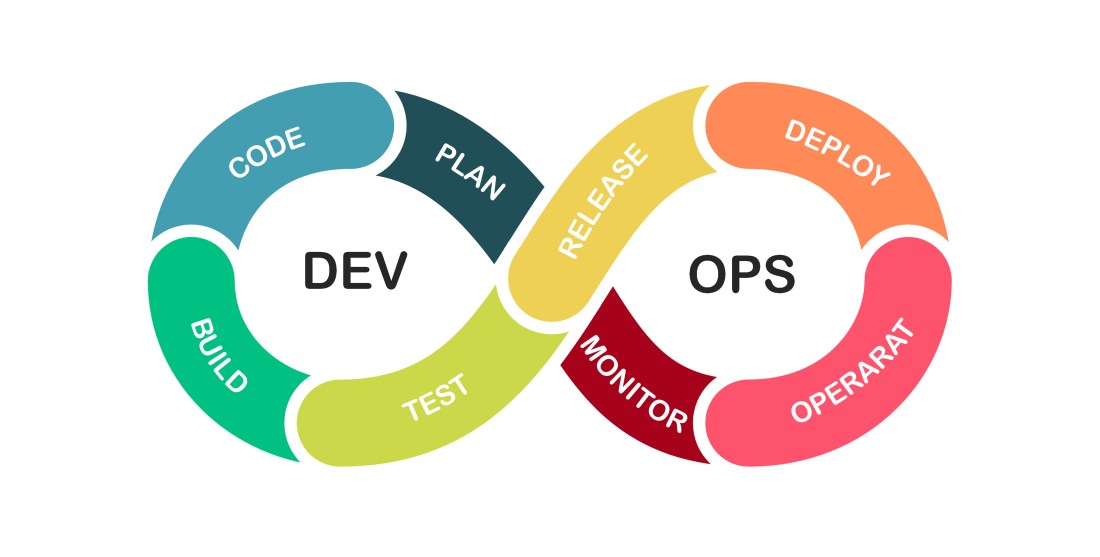

CI/CD

I get so frustrated when I see perfectly talented DevOps engineers building pipelines which drive big-bang thinking, and calling it CI/CD. Can you all please stop? Continuous integration and continuous deployment are two very special principles which drive high quality, prevent bugs reaching production, and generally help things get delivered quicker. Automation alone does …

Helpful.Hosting.WorkerService.Windows/Systemd

A simple but flexible package to turn your command-line project into either a Windows Service or a Linux systemd service.

How to Design Decoupled Systems

Decoupled architecture is one of the biggest enablers of agility, speedy delivery, and high quality; yet many software designers have limited experience of what decoupled looks like. I hear people talk about different amounts of indirection and API layers, without understanding that these don't inherently decouple. I've been confronted with the insistence that because there's …